(continued)

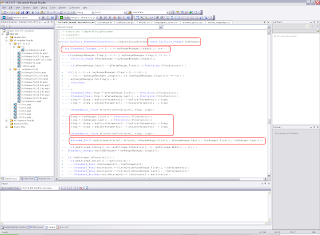

However, this did not fully solve the problem of caching results. As I mentioned earlier, B-Splines are split into C2 intervals which are processed individually. For example, one B-Spline had 20 C2 intervals, so caching only worked within each of them, and every time a new subrange of the other edge's range was requested, all data were recalculated. So I extended the caching to support lists of caches: every time a new subrange of the 1st edge's range is used, points along the subranges of the 2nd edge's range are retrieved from the cache. After this modification, D0() is called only 475K times, which is about 570 times fewer than the initial baseline (273.2M). CPU time dropped by more than 2x. Yes, I did it!
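To make the "list of caches" idea more concrete, here is a minimal sketch. It is not the actual Extrema code: `SampledCurve`, `SubrangeCacheList` and `Points()` are hypothetical names invented for illustration, and the sketch assumes that subrange bounds are reused bit-for-bit so they can serve as an exact lookup key.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <utility>
#include <vector>

struct Point3 { double x, y, z; };

// Hypothetical stand-in for a curve whose D0() is expensive; counts calls.
class SampledCurve {
public:
    explicit SampledCurve(std::function<Point3(double)> eval)
        : eval_(std::move(eval)) {}
    Point3 D0(double u) const { ++calls_; return eval_(u); }
    std::size_t calls() const { return calls_; }
private:
    std::function<Point3(double)> eval_;
    mutable std::size_t calls_ = 0;
};

// Keeps one cache of sample points per parameter subrange [first, last].
// Assumption: the same bounds are passed in unchanged on every request.
class SubrangeCacheList {
public:
    const std::vector<Point3>& Points(const SampledCurve& curve,
                                      double first, double last,
                                      std::size_t n) {
        const std::pair<double, double> key{first, last};
        auto it = caches_.find(key);
        if (it != caches_.end() && it->second.size() == n) {
            return it->second;              // cache hit: no D0() calls
        }
        // Cache miss: sample the subrange uniformly and remember the result.
        std::vector<Point3> pts;
        pts.reserve(n);
        const double step = n > 1 ? (last - first) / double(n - 1) : 0.0;
        for (std::size_t i = 0; i < n; ++i) {
            pts.push_back(curve.D0(first + step * double(i)));
        }
        return caches_[key] = std::move(pts);
    }
private:
    std::map<std::pair<double, double>, std::vector<Point3>> caches_;
};
```

With a single cache, switching to another subrange throws away the previous samples; with one cache per subrange, coming back to an already visited subrange costs only a lookup.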

* New targets *

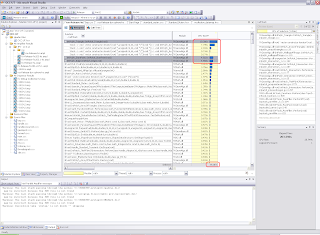

So, what remains? Looking at the new hotspots below, you can see that Extrema_ECCOfExtCC::Perform() is still among the top 3.

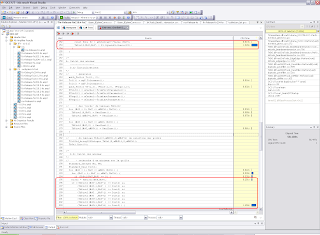

What's inside? Its top 3 hotspots (the 1st and 2nd are shown below; the 3rd is similar to the 2nd) are all connected with access to TbDist2, the array of doubles containing squared distances between sample points along the two curves.

I suspect these are due to data latency, namely cache misses: the referenced data are not available in the processor cache and have to be loaded from RAM, which is time consuming. When some data are loaded from memory, the processor also loads some adjacent memory (it assumes that you may need it and fetches it in advance). With TbDist2 this does not help much, because for each element [i, j] eight neighbors are checked ([i-1,j-1], [i-1,j]…[i+1,j+1]). Data for most of them are not adjacent in memory, so there are likely many misses here. Combined with millions of calls (which, as you correctly guess, did not change after the above modifications), this results in a hotspot.

I am not sure if anything can be done about this, and I will probably have to talk to my Intel colleagues to check what can be done in a case like this. Perhaps the only possible solution lies upstream, in reducing the number of subranges the edges are split into. So far, after brief emails with the OCC team, I have no clue if or how this can be done. So it will be up to them to continue in that direction.
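To illustrate why the eight-neighbor check is unfriendly to the cache, here is a small sketch. It assumes a row-major layout for the distance table; `DistGrid`, `NeighbourByteOffset()` and `IsLocalMin()` are hypothetical names, and the exact comparison used inside Extrema is not reproduced here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major grid of squared distances (hypothetical stand-in for TbDist2).
struct DistGrid {
    std::size_t rows, cols;
    std::vector<double> data;
    double at(std::ptrdiff_t i, std::ptrdiff_t j) const {
        return data[static_cast<std::size_t>(i) * cols +
                    static_cast<std::size_t>(j)];
    }
};

// Distance in bytes between element [i, j] and its neighbour [i+di, j+dj].
inline std::ptrdiff_t NeighbourByteOffset(const DistGrid& g, int di, int dj) {
    const std::ptrdiff_t cols = static_cast<std::ptrdiff_t>(g.cols);
    return (di * cols + dj) * static_cast<std::ptrdiff_t>(sizeof(double));
}

// True if the interior element [i, j] is strictly smaller than all eight
// neighbours. The caller must keep [i, j] away from the grid border.
inline bool IsLocalMin(const DistGrid& g, std::ptrdiff_t i, std::ptrdiff_t j) {
    const double v = g.at(i, j);
    for (int di = -1; di <= 1; ++di) {
        for (int dj = -1; dj <= 1; ++dj) {
            if (di == 0 && dj == 0) continue;
            if (g.at(i + di, j + dj) <= v) return false;
        }
    }
    return true;
}
```

For a table with 1000 columns of doubles, the neighbors [i, j-1] and [i, j+1] sit 8 bytes away and usually share a cache line with [i, j], while the six neighbors in rows i-1 and i+1 are roughly 8000 bytes away. Each check therefore touches three separate regions of memory.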

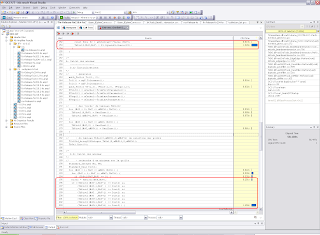

Two other hotspots, PLib::EvalPolynomial() and BSplCLib::CacheD1(), are connected with computing the first derivative on a B-Spline curve. Geom_BSplineCurve::D1() is called when finding a root of the function that minimizes the distance between two curves. BSplCLib::Bohm() is called 2.4M times and PLib::EvalPolynomial() 30M times. I did not find a way to cache these calculations (if you see one, please let me know), so the solution probably also lies upstream.

* Next steps *

So, this is where I am now, and frankly I am about to pause here ;-). I sent the modifications to OCC for validation on the regression database (keep your fingers crossed ;-)). If they are OK, I'm going to make similar modifications for the 2D case to ensure consistency, and perhaps do some other polishing in Extrema for easier future maintenance.

Of course, there is the possibility of trying multi-threading on BOPs, but I am not up to this yet, as it will require a deeper understanding of the algorithms and their concurrency possibilities. We'll see…

Meanwhile, I'll try to post the fixes on SourceForge in case anyone would like to try them. As usual, any feedback will be appreciated, especially if you witness performance improvements or degradation.

(end)

P.S.

After the first article I received a few more test cases and, even better, a set of modifications to BOP for performance improvements. They were made by an OCC customer, registered here as Solar Angel. By his estimate, the speed-up in Cut (Common seems not to be affected) was from a few minutes to a matter of seconds. Cool! I was excited to get these fixes and to get in touch with the guy again after several years!
